Doomslayer: Progress Roundup

Nuclear power progress, an exciting desalination milestone, radio antennae for butterflies, and more.

Economics & Development

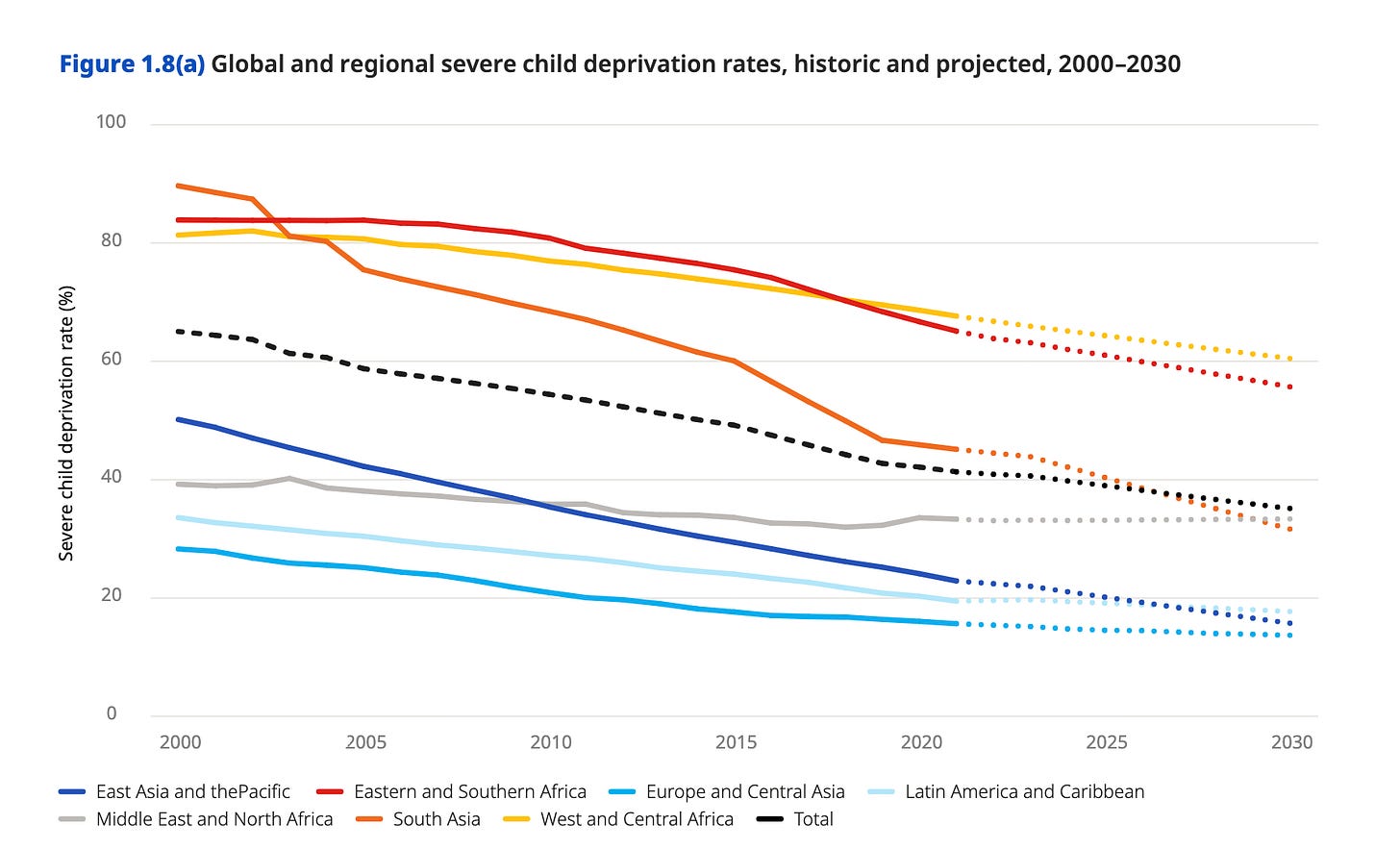

UNICEF’s latest State of the World’s Children report finds that the share of children living in “severe deprivation” fell from 65.1 percent to 40.6 percent between 2000 and 2023. Note that this measure is not based on family income, but instead on indicators related to education, health, housing, nutrition, sanitation, and water.

In ASEAN (Southeast Asian) countries, the poverty rate—defined as the share of people living under their country’s poverty line—fell to 10.8 percent in 2023, down from 13.3 percent in 2016.

Argentina’s year-over-year inflation rate was 31 percent in October, down from 193 percent a year earlier.

Seventy-three percent of women and 78 percent of men in low- and middle-income countries had financial accounts in 2024, thanks largely to mobile phones.

Energy & Environment

Conservation and biodiversity

Following a successful rat eradication, puffins are nesting on Northern Ireland’s Isle of Muck for the first time in at least 25 years.

American crocodiles are recovering in Florida, thanks in part to a thriving nesting ground in the cooling canals of the Turkey Point nuclear plant, where biologists recorded more than 600 hatchlings this summer. The Florida population, once down to just a few hundred animals, now numbers around 2,000 statewide and is spreading back into its old territory.

Energy and natural resources

Valar Atomics has become the first company in the Department of Energy’s new pilot program to achieve a self-sustaining chain reaction in its reactor. This is a test, not a commercial deployment, but it marks early progress in an effort to bypass the normal, onerous licensing process for test reactors and help younger nuclear companies turn their designs into reality.

Israel is now pumping desalinated seawater into the drought-stressed Sea of Galilee—apparently the first time in history that desalination has been used to refill a natural lake.

Rainmaker, a US startup, is attempting to use cloud seeding to increase snowfall in Utah, thereby replenishing the Great Salt Lake.

The Infinite Well: How Innovation Keeps Water Flowing

Rather than a fixed natural endowment, fresh water is largely a product of human innovation and engineering.

Health & Demographics

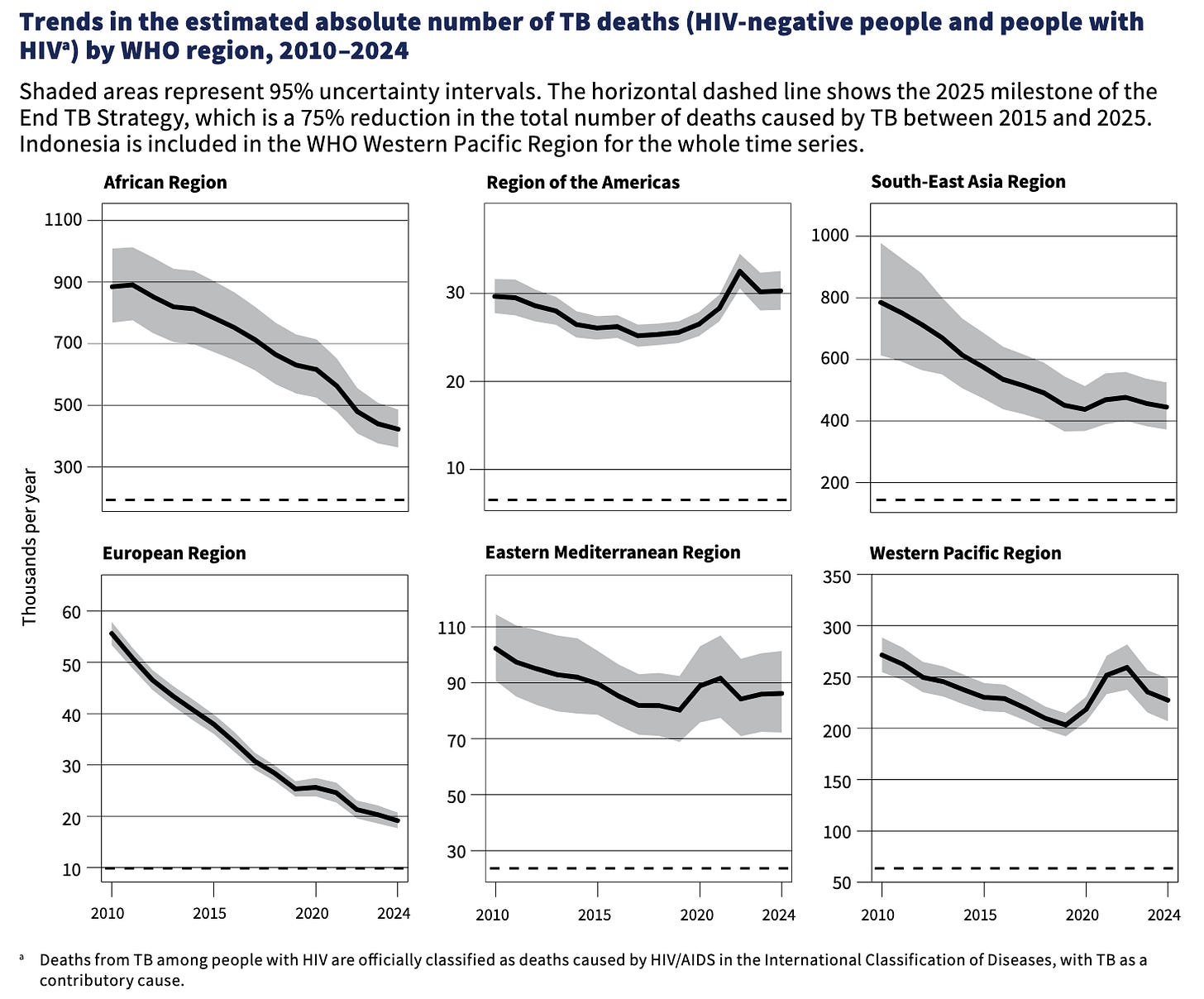

According to the World Health Organization, global tuberculosis deaths fell by over 40 percent between 2010 and 2024, from 2.13 million to 1.23 million. Africa, where a large share of tuberculosis deaths occur, saw particularly rapid progress.

The African island nations of Cabo Verde, Mauritius, and Seychelles have eliminated measles and rubella.

Science & Technology

Scientists have successfully extracted and sequenced RNA molecules from a ~40,000-year-old frozen woolly mammoth—the oldest RNA ever recovered. RNA can show which genes were active at the moment of death, providing insights about the animal’s metabolism, stress level, immune activity, and even the microbes it carried.

Researchers have built a tiny robot that can move through blood vessels, deliver a dose of drugs to a precise spot, and then dissolve. The robots hit their target in more than 95 percent of tests in pigs and sheep, offering a possible way to treat disorders like strokes or brain tumors without exposing the entire body to powerful chemicals.

Cellular Tracking Technologies, a wildlife-tracking hardware company, has built radio tags light enough to ride on a monarch butterfly, letting scientists track individual insects across their long migration routes.

The CEO of Foxconn, the world’s largest electronics contract manufacturer, recently announced plans to deploy humanoid robots in the company’s Houston factory within “six months or so.”

Zoox, the Amazon-owned robotaxi company, is now offering rides in San Francisco, and Waymo has started operating in Miami.

Violence & Coercion

Representatives from the Democratic Republic of Congo and the Rwandan-backed M23 rebel group recently met in Qatar to lay out a preliminary plan to end their conflict, which has killed thousands of people and displaced hundreds of thousands more.

Love this!

Technically, water is not “consumed” (used up), even when people drink it. It makes its way back to nature, eventually to the oceans, where it evaporates to form rain clouds that provide fresh water.

AI data centers (like all massive computer facilities) use water for cooling, returning the water to nature much warmer than when it was obtained. In essence, the water is not being “consumed”. It is carrying away the “consumed” concentrated electrical energy as dispersed heat energy. The shrinking of old, massive “vacuum tube” computer facilities down to tiny microchips has vastly reduced the “heat produced per calculation”, by a factor of a trillion, but we are doing millions of trillions of times as many calculations as back in the 1960s.

So, we must employ revised architectures, devised specifically for AI-style calculations, in order to reduce the “heat footprint” such calculations impose upon the environment. These architectures, down at the microscopic chip level, need to distribute the input data throughout the compute fabric rather than continually moving it to and from RAM over data buses (an activity responsible for 95% of the energy expended in typical waves of matrix calculations).

But I’m not worried about the water; it’s not going anywhere. It just needs to be cooled without harming the ecosystem.